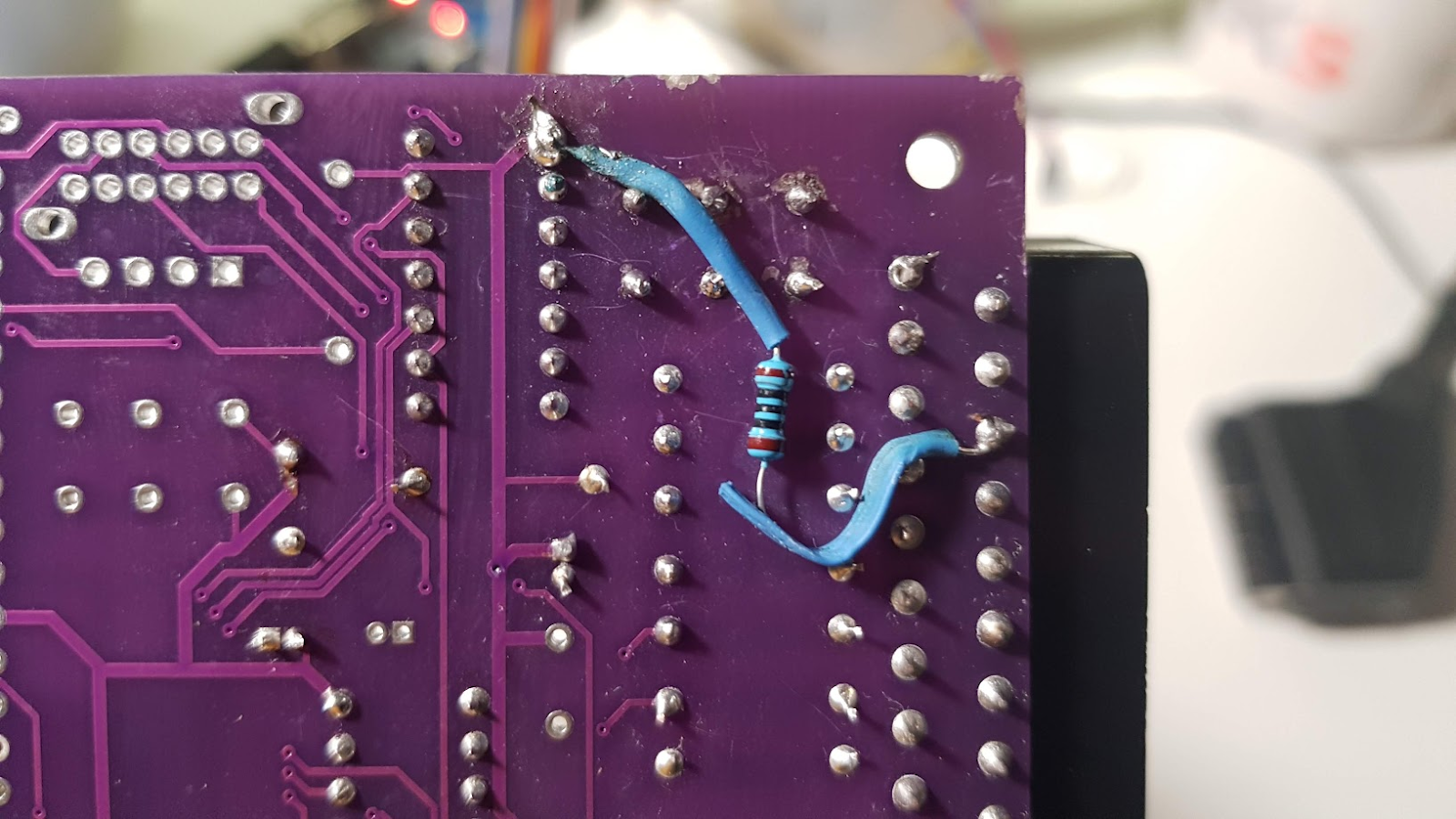

The KAPE GPU is very nearly ready to be moved from breadboard to a PCB. The last things I wanted to get working were the sprite handling and a CPU<->GPU interface that is relatively easy to drive, as we are already very tight on cycles on the AVR side. I solved the CPU<->GPU interface by adding yet another FIFO: an IDT7203 asynchronous dual parallel 2K FIFO in DIP form. I call this the command queue, or CMDQ.

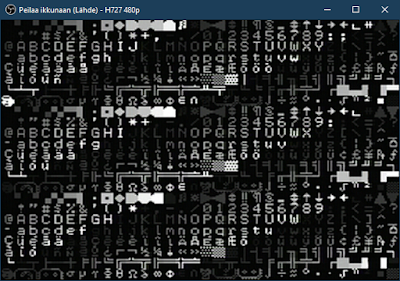

Making the sprites work, though, was a lot more involved than just throwing one chip at the problem. My target for sprite handling was 32 sprites at 25 FPS with a "full" CMDQ. What I mean by full is that on every frame, it has been filled with commands to change the position of all 32 currently active sprites. The following image has 8 sprites for the player character, 4 sprites for the big rock ball, 19 sprites for little rock balls and 1 sprite for the bullet.

The WRITE_RESET is brought low after every frame has been drawn, and it also signals the test program to send data for another frame. The RX line is the serial data we send to the UNO, which acts as a faux CPU and forwards that data to the GPU through the CMDQ. I get very close to the 25 FPS target: ~23.6 FPS with 32 sprites. I tried to optimize the sprite drawing and tile drawing code as best I could; if anyone has any pointers for optimizing it even further, I'm open to suggestions! (There's some code later in this article, and I'll also publish all the current code on GitHub for general consumption and post the link here once I'm done.)

The Memory Setup

The earlier implementation had only one text mode, with a bitmap chargen memory for the symbols to draw on screen. The characters were embedded in the AVR flash, but to be able to draw sprites and tilemaps, I need RAM. This is one of the reasons I opted to use the ATmega1284P as the GPU chip, as it has the most SRAM in the 8-bit AVR line. With 16K of internal memory, I actually have enough memory to set up a pattern table with straight-up colors. I thought about having a NES-like 2 bits per pixel indexed palette with a separate palette register, but decided against it, as it would incur a penalty on drawing performance. We want the data to be as straightforward to output as possible, to use as few cycles as possible when drawing the sprites and tilemaps.

This reminds me, an earlier decision has come back to bite my butt a bit... At some point I decided to pack two pixels into one byte (as my pixels are 4 bit RGBI values) to decrease the time we need for writing the pixel data to the FrameBuffer FIFO. At the time I was only doing a textmode chargen output, and I calculated a 10% improvement in FPS, which felt like a warranted modification. Currently though, the sprite and tile drawing would be a lot more straightforward, both code-wise and output-wise, with one pixel per byte, and I feel like I don't need to improve text mode that badly if it hinders the tilemap and sprite drawing. However, I can't really change it back either. To conserve memory, as I have so little of it, I need to keep the graphics data in the packed pixel format. To be fair, the tilemap drawing will still be relatively straightforward, as I just output the pixels as they are, but sprite handling gets a bit hairy, as we need to process each pixel for transparency.

Anyhoo, 8K of the KAPE GPU's 16K is used for pattern table memory. This is straight-up packed pixel data in a 16x16 configuration, with each item being 8x8 pixels. This means you can upload 256 different patterns to be drawn on screen. This pattern table is used both for tilemap drawing (the background) and sprite drawing. Then there are two 32x24 byte arrays to hold the character framebuffer and the color buffer. Each buffer is 768 bytes, so together they take 1.5K.

For sprites we have a 256 item array of sprite struct data. The sprite struct contains the control byte (which has data for whether the sprite is activated or not etc.), the screen position in pixels (max [255,191], with [0,0] at the top left), the pattern table index that is used to draw this sprite, and the color used for transparency. The transparency color is stored twice, once in the low nibble and once in the high nibble, so that we don't have to calculate this every time we draw the sprites again (yet another drawback of the packed pixel format I'm using internally). This adds up to 6 bytes per sprite, 256 sprites total, so that is also 1.5K.

    typedef volatile struct __attribute__ ((__packed__)) sprite_t_s {
        uint8_t control;
        uint8_t y;
        uint8_t x;
        uint8_t tile_index;
        uint8_t alpha_lo;
        uint8_t alpha_hi;
    } sprite_t;
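To illustrate why the transparency color is stored twice, here is a minimal C sketch of how the two fields could be filled in when the alpha color is set. The helper name `sprite_set_alpha` is made up for this example, and the struct is a plain (non-volatile) copy of the one above, so treat it as an assumption rather than the actual KAPE code.

```c
#include <assert.h>
#include <stdint.h>

/* Plain copy of the sprite struct above. All fields are single bytes,
 * so it is 6 bytes even without the packed attribute. */
typedef struct {
    uint8_t control;
    uint8_t y;
    uint8_t x;
    uint8_t tile_index;
    uint8_t alpha_lo;   /* transparent color in the low nibble         */
    uint8_t alpha_hi;   /* same color, pre-shifted to the high nibble  */
} sprite_t;

static_assert(sizeof(sprite_t) == 6, "sprite_t should be 6 bytes");

/* Hypothetical helper: store a 4-bit RGBI color in both nibble positions,
 * so the drawing loop can compare either half of a packed byte directly. */
void sprite_set_alpha(sprite_t *s, uint8_t color)
{
    assert(s != 0);
    s->alpha_lo = (uint8_t)(color & 0x0F);
    s->alpha_hi = (uint8_t)((color & 0x0F) << 4);
}
```

The shift happens once, when the command arrives, instead of once per sprite byte per line while drawing.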

There's also a 128 byte long array for the linebuffer. The screen is drawn internally line by line, and the sprites are drawn over the tilemap into this array before it is sent out to the FBF (FrameBuffer FIFO). Lastly, there is a 96 byte long array (one bit per character cell) for selecting whether to draw from the pattern table or from the chargen in the combined mode - this mode combines graphics and text mode, and you can select with this array which one to use. This is normally not used in the graphics mode, as it incurs a small performance penalty for checking yet another array and doing a bitmask to see whether we use the chargen or the pattern table memory (and to be fair, it hasn't even been implemented yet). Still, I feel this is a necessary feature, as adding text and numbers to the pattern table restricts its usage even more - 256 different 8x8 patterns is not a lot. Of course we could work around this limitation by changing the pattern table memory every frame, but that gets troublesome fast. The open question is whether I can optimize the switch checking code to be fast enough. We could limit the cost by not drawing sprites over text mode chargen cells, but performance-wise, I'm not sure how much that would give back. This could of course also be controlled on a per-line basis, and by blocking sprite drawing on those lines we could do status bars etc. with text only.

Out of 16K we are using a total of a bit over 11K, and are left with 5K memory to still use. Some of that 5K memory usage is required for global and local C variables, so we can't use it all up, but we could probably assign another 4K for half a pattern table to get more pattern graphics for tilemap and sprite drawing usage, and use the control byte and assign one of the bits to mean that the second pattern table is being indexed.
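For anyone who wants to check the numbers, the memory budget can be tallied in a few lines of C. This is only a sketch of the arithmetic: the constant and function names are made up for illustration and are not taken from the actual KAPE source.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical names; the sizes are the ones quoted in the text. */
enum {
    SRAM_SIZE          = 16 * 1024,
    PATTERN_COUNT      = 256,
    PATTERN_BYTES      = 8 * 8 / 2,                     /* 8x8 pixels, 2 per byte */
    PATTERN_TABLE_SIZE = PATTERN_COUNT * PATTERN_BYTES, /* 8K                     */
    TEXT_COLS          = 32,
    TEXT_ROWS          = 24,
    CHAR_BUFFER_SIZE   = TEXT_COLS * TEXT_ROWS,         /* 768                    */
    COLOR_BUFFER_SIZE  = TEXT_COLS * TEXT_ROWS,         /* 768                    */
    SPRITE_TABLE_SIZE  = 256 * 6,                       /* 256 sprites, 6 bytes   */
    LINEBUFFER_SIZE    = 256 / 2,                       /* 256 pixels, 2 per byte */
    COMBINED_MASK_SIZE = TEXT_COLS * TEXT_ROWS / 8      /* 1 bit per cell         */
};

static_assert(PATTERN_TABLE_SIZE == 8192, "pattern table should be 8K");

/* Total bytes taken by the buffers described above. */
uint16_t kape_memory_used(void)
{
    return (uint16_t)(PATTERN_TABLE_SIZE + CHAR_BUFFER_SIZE + COLOR_BUFFER_SIZE +
                      SPRITE_TABLE_SIZE + LINEBUFFER_SIZE + COMBINED_MASK_SIZE);
}

/* Bytes left over for C variables, stack and possible extra pattern data. */
uint16_t kape_memory_free(void)
{
    return (uint16_t)(SRAM_SIZE - kape_memory_used());
}
```

That works out to 11488 bytes used and 4896 bytes free, which is why a 4K half pattern table would leave very little room for the stack and variables.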

Graphics Modes

The current software design has 4 graphics modes, although only 3 of them have been implemented as of now.

- Mode 0: 32x24 Text Mode

- Mode 1: 256x192 Tiled Graphics

- Mode 2: 32x24/256x192 Combined Text/Tiled Graphics

- Mode 3: 128x96 Lores PixelBuffer

Modes 0, 1 and 3 have been implemented. Mode 0, the text mode, has a 32x24 character framebuffer and a 32x24 color buffer, with the background color in the low nibble and the foreground color in the high nibble.

The Tiled Graphics mode, Mode 1, re-uses that character framebuffer as the tiled background's indices into the pattern table, and also uses the sprite memory to draw sprites from the pattern table. The sprite drawing handles a transparent color, which defaults to 0 (black) but can be changed to any of the 16 colors. The tilemap drawing does not use transparency.

Mode 3, or the Lores PixelBuffer, re-uses the pattern table memory and interprets it as chunky, linear memory that is straight up just copied to the FBF (FrameBuffer FIFO). Mode 3 is the fastest at copying the screen to the FBF, at ~10ms (~100 FPS), for quite obvious reasons (it's just straight up copying from internal memory to external memory). However, sending the updated frame information to the KAPE GPU takes a minimum of 3 frames (4 actually, as we need at least 1 byte for the command to update the screen, and then 6K for the data), as the CMDQ is only 2K in size. That means we get to around 40 ms per frame (~25 FPS), with 3 frames showing an intermediate update image. So this mode isn't really good for anything other than showing static 128x96 images, but it does work well at that.
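The frame count above is just a ceiling division, sketched here in C. The names are hypothetical, and the sketch assumes (as the text implies) that the CMDQ is filled and drained once per ~10 ms screen copy.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical names; values are the ones quoted in the text. */
enum {
    LORES_WIDTH  = 128,
    LORES_HEIGHT = 96,
    CMDQ_BYTES   = 2048,  /* IDT7203 depth                    */
    COPY_MS      = 10     /* approx. time for one screen copy */
};

static_assert(LORES_WIDTH * LORES_HEIGHT / 2 == 6 * 1024, "lores frame is 6K");

/* Bytes in one packed 128x96 RGBI frame (2 pixels per byte). */
uint16_t lores_frame_bytes(void)
{
    return (uint16_t)(LORES_WIDTH * LORES_HEIGHT / 2);
}

/* How many times the CMDQ has to be filled to deliver one frame,
 * including at least 1 command byte in front of the pixel data. */
uint16_t lores_cmdq_fills(void)
{
    uint16_t payload = (uint16_t)(lores_frame_bytes() + 1);
    return (uint16_t)((payload + CMDQ_BYTES - 1) / CMDQ_BYTES);
}

/* Approximate time to get one complete new frame on screen. */
uint16_t lores_update_ms(void)
{
    return (uint16_t)(lores_cmdq_fills() * COPY_MS);
}
```

The single command byte is what pushes 6K of data from three queue fills to four.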

For future improvements, we could increase the CMDQ size by adding 3 more IDT7203s, by using 2 IDT7204s, or by using a single IDT7205, so that the CMDQ could hold a whole frame. I won't be using the AL422B (the current FBF chip) for the CMDQ, as it's a much harder chip to drive and use, whereas the IDT720x chips are as simple as sliced bread. We could also implement the extra 4K of pattern table space to increase pattern table memory to 12K, which is incidentally the same amount of memory needed for two 128x96 RGBI images. This would enable a double-buffering method, to show one image while writing the other one. Actually, we could do this right now with some clever programming, using the fact that the FBF's read side can be reset independently of the write side, so it can keep showing old data if we want it to. Let's just add a command to write the internal buffer out to the FBF (CMD_FLUSH_FRAMEBUFFER?), prevent automatic updating of the FBF when in Mode 3, and send that flush command after a new frame has been sent. This would give us a fullscreen 128x96 chunky graphics mode at around 25 FPS. Food for thought for later...

Mode 0 is quite simple, though there is some memory handling and byte manipulation to turn a 1-bit bitmap into colored text on screen. For each character we have 8 bytes, where each bit represents whether to use the background color or the foreground color. We look up the background and foreground colors for the current character in the color buffer, so they are settable individually for each onscreen character.

First we extract both colors from the packed color byte into FG_COLOR and BG_COLOR. Then we create a high-nibble version of each, FG_COLOR2 and BG_COLOR2. After this, all we have to do is check the character's bits in the current row's byte, two bits at a time, and OR together the correct combination of FG/BG_COLOR and FG/BG_COLOR2.

    ; extract color from color buffer
    ld   rBG_COLOR, Z+          ; get color buffer byte
    mov  rFG_COLOR2, rBG_COLOR
    andi rFG_COLOR2, 0xF0       ; foreground color is in high nibble
    andi rBG_COLOR, 0x0F        ; background color is in low nibble
    ; shift right four times to get the other nibble for foreground color
    mov  rFG_COLOR, rFG_COLOR2
    lsr  rFG_COLOR
    lsr  rFG_COLOR
    lsr  rFG_COLOR
    lsr  rFG_COLOR
    ; multiply by 16 (shift 4 bits left) for the other nibble for background color
    ldi  r25, 16
    mul  rBG_COLOR, r25
    mov  rBG_COLOR2, r0

After extracting the color information, we go on and check the chargen bitmap to select the correct color for each pixel, 2 pixels at a time. We repeat this 4 times to cover all 8 pixels, and then move on to the next character in this row.

    ; --- Pixel 7 and 6
    mov  rCOLOR, rBG_COLOR      ; preload the pixel with the bg color
    sbrs rCHAR,7                ; check the 8th bit, if set, skip fg color
    mov  rCOLOR, rFG_COLOR      ; if not set, set the fg color for the pixel
    ; same thing as on the last pixel, but we use r25 as a temporary register
    mov  r25, rBG_COLOR2
    sbrs rCHAR,6
    mov  r25, rFG_COLOR2
    ; now we just combine the registers together into one register, and move
    ; that to the linebuffer
    or   rCOLOR, r25
    st   X+, rCOLOR
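For readers who don't speak AVR assembly, here is roughly the same text mode row expansion as a C sketch. The function name is made up, and it mirrors the assembly as written above: a set bit skips the foreground move and keeps the preloaded background color, and the first pixel of each pair lands in the low nibble of the output byte.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical C rendering of the text mode row expansion.
 * char_row: one row (8 bits) of the chargen bitmap
 * color:    color buffer byte, fg in the high nibble, bg in the low nibble
 * out:      4 packed bytes (2 pixels per byte) for the linebuffer */
void text_row_expand(uint8_t char_row, uint8_t color, uint8_t out[4])
{
    assert(out != 0);

    uint8_t bg_lo = (uint8_t)(color & 0x0F);   /* rBG_COLOR  */
    uint8_t fg_hi = (uint8_t)(color & 0xF0);   /* rFG_COLOR2 */
    uint8_t fg_lo = (uint8_t)(fg_hi >> 4);     /* rFG_COLOR  */
    uint8_t bg_hi = (uint8_t)(bg_lo << 4);     /* rBG_COLOR2 */

    for (uint8_t i = 0; i < 4; i++) {
        uint8_t first_bit  = (uint8_t)(0x80u >> (2 * i));  /* bits 7, 5, 3, 1 */
        uint8_t second_bit = (uint8_t)(0x40u >> (2 * i));  /* bits 6, 4, 2, 0 */

        /* Like sbrs: when the bit is set, the fg move is skipped. */
        uint8_t lo = (char_row & first_bit)  ? bg_lo : fg_lo;
        uint8_t hi = (char_row & second_bit) ? bg_hi : fg_hi;

        out[i] = (uint8_t)(lo | hi);
    }
}
```

The assembly version is unrolled instead of looped, which avoids the shift and loop counter overhead on the AVR.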

In Mode 1, the Tiled Graphics mode, we use the character screen buffer the same way as in text mode, but instead of the index pointing to the chargen, it points to the pattern table. The pattern table in tiled mode is simply copied 4 bytes per "character" (so 8 pixels) at a time, and the biggest overhead here comes from keeping track of and calculating the proper offsets for different places in memory. The pattern table has all of one tile's data in one block, so it isn't rectangularly organized. I think this de-complicates offset calculations, but I'm not actually sure - it's just a feeling that it would get a lot more complicated if I stored the patterns in memory as though they were one big image where I cut parts out and copy them, instead of just calculating the offset in a linear fashion with a format where all of one pattern's data is back-to-back. Here is the full line drawing code for tilemaps in Mode 1:

    1: ; loop x char
        .equ r2PXL,19
        .equ rINDEX,20
        ; Setup address pointer for pattern table
        ldi ZL, lo8(scr_buffer_graphics_pattern_table)
        ldi ZH, hi8(scr_buffer_graphics_pattern_table)
        ld rINDEX,Y+
        ldi r25,32          ; 32 bytes per pattern
        mul rINDEX,r25      ; index multiplied by data width to get offset
        add ZL,r0
        adc ZH,r1
        ldi r25, 7
        and r25,rPY         ; which line is it from 0 to 7?
        lsl r25
        lsl r25             ; multiply this by 4 (two left shifts) to get the offset
        add ZL,r25
        adc ZH,rZERO
        ; write 4 bytes to linebuffer
        ld r2PXL,Z+
        st X+,r2PXL
        ld r2PXL,Z+
        st X+,r2PXL
        ld r2PXL,Z+
        st X+,r2PXL
        ld r2PXL,Z+
        st X+,r2PXL
        inc rTX
        cpi rTX, SCR_TEXT_WIDTH
        brne 1b             ; loop x char
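The offset calculation in that loop boils down to `index * 32 + (line & 7) * 4`. Here is a small C sketch of the same thing, with made-up function names, in case the assembly is hard to follow.

```c
#include <assert.h>
#include <stdint.h>

#define PATTERN_BYTES 32u  /* 8 rows of 4 packed bytes per pattern */
#define ROW_BYTES      4u  /* 8 pixels, 2 pixels per byte          */

/* Byte offset into the pattern table for one row of one pattern.
 * py is the screen line; only its low 3 bits pick the row in the tile. */
uint16_t pattern_row_offset(uint8_t index, uint8_t py)
{
    return (uint16_t)(index * PATTERN_BYTES + (py & 7u) * ROW_BYTES);
}

/* Copy one 8-pixel tile row (4 bytes) into the linebuffer. */
void tile_row_copy(const uint8_t *pattern_table, uint8_t index,
                   uint8_t py, uint8_t *line)
{
    assert(pattern_table != 0 && line != 0);
    const uint8_t *src = pattern_table + pattern_row_offset(index, py);
    for (uint8_t i = 0; i < ROW_BYTES; i++) {
        line[i] = src[i];
    }
}
```

Because every pattern's 32 bytes are back-to-back, the largest offset is 255 * 32 + 7 * 4 = 8188, which stays inside the 8K table.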

Now we get to the "complicated" part. Well, it's not really that much more complicated than the text mode character drawing or the tilemap pattern drawing, but there are some... things we need to take into account. First of all, we really don't want to calculate everything for all sprites on every line, since not all sprites are always active. For that, we have an active bit in the control byte, and on every line we check each sprite's active bit; if it's not set, we skip to the next sprite. This is subpar though: it means we need to check 256 sprites for their active bit, on all 192 lines. One check takes 8 cycles, so with 224 inactive sprites and 192 lines we spend ~344K cycles per frame just checking inactive sprites! That's a lot (about 1/3 of the cycles used for all other drawing)! However, all my attempts to optimize this have been in vain. If I hardcode the maximum sprite count to 32, I can actually increase the speed to 50 FPS! Even if I increase this to 64 sprites (where 32 sprites are inactive) I can still get over 40 FPS, so it might be a viable option to just limit the sprite count to 32 or 64.

One possible method would be to keep a list of active sprites per line, and only draw these sprites, but that would a) transfer the calculations from the drawing routine to the CMDQ handling routines and b) require more memory. That might still be a lot faster than just checking for all sprites on every line, but my earlier attempts at this got real complicated real fast. I might revisit this later, but for now, I'm fine with limiting the sprite count to 32. If I keep most enemies at 1 - 4 sprites, and the main character at 8 sprites, I should get player character + from 5 to 23 other moving objects and/or enemies.
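A simpler variant of that idea would be one compact list of active sprite indices per frame (not per line), maintained by the CMDQ handlers when a sprite is activated or deactivated. The following C sketch is only an illustration under that assumption; the type and function names are made up, and it costs a bit over 256 bytes of extra RAM.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_SPRITES 256

/* Hypothetical compact list of active sprite indices. */
typedef struct {
    uint8_t  index[MAX_SPRITES];  /* first `count` entries are valid */
    uint16_t count;
} active_list_t;

/* Position of `sprite` in the list, or -1 if it is not active. */
int active_list_find(const active_list_t *l, uint8_t sprite)
{
    for (uint16_t i = 0; i < l->count; i++) {
        if (l->index[i] == sprite) {
            return (int)i;
        }
    }
    return -1;
}

/* Called from the "set sprite active" command handler. */
void active_list_add(active_list_t *l, uint8_t sprite)
{
    assert(l != 0);
    if (active_list_find(l, sprite) < 0) {
        l->index[l->count++] = sprite;
    }
}

/* Called from the "set sprite not active" command handler.
 * Swap-remove: fast, but it changes the draw order of the last sprite. */
void active_list_remove(active_list_t *l, uint8_t sprite)
{
    assert(l != 0);
    int pos = active_list_find(l, sprite);
    if (pos >= 0) {
        l->index[pos] = l->index[--l->count];
    }
}
```

With this, the per-line loop would only walk `count` entries instead of testing all 256 control bytes, at the price of the linear search in the command handlers and a draw order that no longer follows the sprite index.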

The sprite drawing itself though, that's a simple repetition of the pattern drawing. We simply calculate the correct X position in the linebuffer, and draw over the contents there. For transparency, we need to first read the sprite byte, then extract the pixels in its nibbles, and compare each sprite pixel with the transparency color. If it is transparent, we use the linebuffer pixel, and if it's not, we use the sprite pixel. The correct X position gets a bit hairy to calculate, though. Yet another drawback of packing the pixels as two nibbles in one byte is that drawing a sprite starting and ending mid-byte gets quite complicated. Complicated enough, in fact, that I haven't wanted to think about it at all, and just decided that I can live with a small little quirk: sprites can only be drawn starting from even X positions. After we get more involved with Wreckless Abandond development, we can get back to this and try to fix it, in case the 2 pixel minimum movement gets too jarring.
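The per-byte transparency check can be sketched in C like this. The function names are hypothetical, the clipping at the right edge of the linebuffer is my assumption for the example, and `alpha_lo`/`alpha_hi` are the two pre-shifted copies of the transparent color from the sprite struct.

```c
#include <assert.h>
#include <stdint.h>

#define LINEBUFFER_BYTES 128  /* 256 pixels, 2 pixels per byte */

/* Merge one packed sprite byte over one packed linebuffer byte.
 * A sprite nibble equal to the transparent color keeps the background. */
uint8_t sprite_blend_byte(uint8_t dst, uint8_t src,
                          uint8_t alpha_lo, uint8_t alpha_hi)
{
    assert((alpha_lo & 0xF0) == 0 && (alpha_hi & 0x0F) == 0);
    uint8_t out = dst;
    if ((src & 0x0F) != alpha_lo) {
        out = (uint8_t)((out & 0xF0) | (src & 0x0F));
    }
    if ((src & 0xF0) != alpha_hi) {
        out = (uint8_t)((out & 0x0F) | (src & 0xF0));
    }
    return out;
}

/* Draw one 8-pixel sprite row (4 packed bytes) into the linebuffer.
 * x >> 1 drops the lowest bit, so odd X positions snap to the even
 * pixel on their left, matching the "even X only" quirk. */
void sprite_draw_row(uint8_t *line, const uint8_t row[4], uint8_t x,
                     uint8_t alpha_lo, uint8_t alpha_hi)
{
    uint8_t start = (uint8_t)(x >> 1);
    for (uint8_t i = 0; i < 4; i++) {
        uint16_t pos = (uint16_t)(start + i);
        if (pos >= LINEBUFFER_BYTES) {
            break;  /* assumed: clip at the right edge */
        }
        line[pos] = sprite_blend_byte(line[pos], row[i], alpha_lo, alpha_hi);
    }
}
```

Having both `alpha_lo` and `alpha_hi` ready means neither compare needs a shift, which is the whole point of storing the color twice.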

CMDQ

One last thing before I drop out of this long wall-of-text-of-a-post. To communicate with the GPU and send pattern data and other commands to it, I slapped on another FIFO chip, the IDT7203, between the GPU and CPU (as mentioned at the start of this post). This works as an asynchronous interface to the GPU, and is only writeable on the CPU side. To make sure the GPU is in a known state, you reset it with the 0xFF command, sent 34 times in a row. (The maximum number of argument bytes in any command is 33, for CF_CMD_SEND_PATTERN_DATA (0x80), which takes 1 byte for the pattern table index and 32 bytes of packed pixel color data. If the GPU happens to be in the middle of that command, up to 33 of the reset bytes get swallowed as arguments, and the 34th makes sure a reset command is actually consumed.)
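Here is a minimal C sketch of that reset sequence from the CPU side, together with a toy model of a receiver that is stuck in the middle of a command. The write callback, the `parser_t` struct and the function names are all made up for the example; the real GPU command parser may look quite different.

```c
#include <assert.h>
#include <stdint.h>

#define CF_CMD_RESET_GPU 0xFF
#define CF_MAX_ARG_BYTES 33                      /* CF_CMD_SEND_PATTERN_DATA */
#define CF_RESET_REPEAT  (CF_MAX_ARG_BYTES + 1)  /* 34 */

/* Hypothetical transport hook: pushes one byte into the CMDQ. */
typedef void (*cmdq_write_fn)(uint8_t byte, void *ctx);

/* Send enough reset bytes to escape any half-finished command. */
void gpu_send_reset(cmdq_write_fn write, void *ctx)
{
    assert(write != 0);
    for (uint8_t i = 0; i < CF_RESET_REPEAT; i++) {
        write(CF_CMD_RESET_GPU, ctx);
    }
}

/* Toy receiver model: counts how many bytes were treated as real resets. */
typedef struct {
    uint8_t  args_pending;  /* argument bytes still owed to the current command */
    uint16_t resets_seen;
} parser_t;

void parser_feed(uint8_t byte, void *ctx)
{
    parser_t *p = (parser_t *)ctx;
    if (p->args_pending > 0) {
        p->args_pending--;  /* byte swallowed as an argument */
        return;
    }
    if (byte == CF_CMD_RESET_GPU) {
        p->resets_seen++;
    }
}
```

In the worst case the model starts with 33 argument bytes pending and still sees exactly one reset; starting from an idle state, it simply sees 34 harmless resets.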

Here is the current command list:

    CF_CMD_SEND_CHARACTER           0x00
    CF_CMD_SEND_COLOR               0x01
    CF_CMD_SET_INDEX                0x10
    CF_CMD_SET_SPRITE               0x20
    CF_CMD_SET_SPRITE_ACTIVE        0x21
    CF_CMD_SET_SPRITE_NOT_ACTIVE    0x22
    CF_CMD_SET_SPRITE_INDEX         0x25
    CF_CMD_SET_SPRITE_X             0x26
    CF_CMD_SET_SPRITE_Y             0x27
    CF_CMD_SET_SPRITE_HOTSPOTX      0x28
    CF_CMD_SET_SPRITE_HOTSPOTY      0x29
    CF_CMD_SET_SPRITE_ALPHA_COLOR   0x30
    CF_CMD_SETMODE_TEXT             0x40
    CF_CMD_SETMODE_GRAPHICS         0x41
    CF_CMD_SETMODE_COMBINED         0x42
    CF_CMD_SETMODE_LORES            0x43
    CF_CMD_CLEAR_SCREEN             0x4A
    CF_CMD_SET_COMBINED_BITMASK     0x50
    CF_CMD_SEND_PATTERN_DATA        0x80
    CF_CMD_RESET_GPU                0xFF

The Sprite Hotspot set routines and the Combined mode functions are the only things not yet implemented. I might skip the Hotspot stuff completely, to keep the sprite drawing code as fast as possible. The idea behind the hotspot code was so that you could draw sprites at [0,0] and still only show the one pixel from the lower left corner of the sprite, to be able to show sprites moving in and out of the screen on all sides. It's not that penalizing to performance (just a register copy, a bitmask AND, some shifts depending on which one we are checking for, and adding the value to the currently used x and y), but even "small" things like this have a tendency to blow up. So, for now, I'm happy with the way it is currently implemented, and might remove the commands altogether.

Currently I'm fairly confident in this HW design, as far as early prototypes go. There is one thing I'm going to add to it though: a single unidirectional serial line from the GPU AVR to the PT (PixelTimer) AVR, with bitbanged serial from GPU to PT for changing the pixel timing values. Currently everything is hardcoded in the PixelTimer, and I'd like to be able to change the timings between NTSC, PAL, progressive/interlaced, non-standard modes etc. from software. For that though, I can just hook a line from one of the free pins on the GPU AVR to one of the free pins on the PT AVR, and implement the software later.

Is it over yet?

Phew, this was a long, long post with lots of technical details. If you managed to read this far, I commend your resolve! Hopefully it was interesting for you, and as always, all comments, feedback, constructive criticism and help are appreciated! I sometimes stream working on this project on Twitch at https://www.twitch.com/zment in case you are interested in following the progress live. I'm not doing it that often though, and I stream very rarely these days anyhow, but you never know!

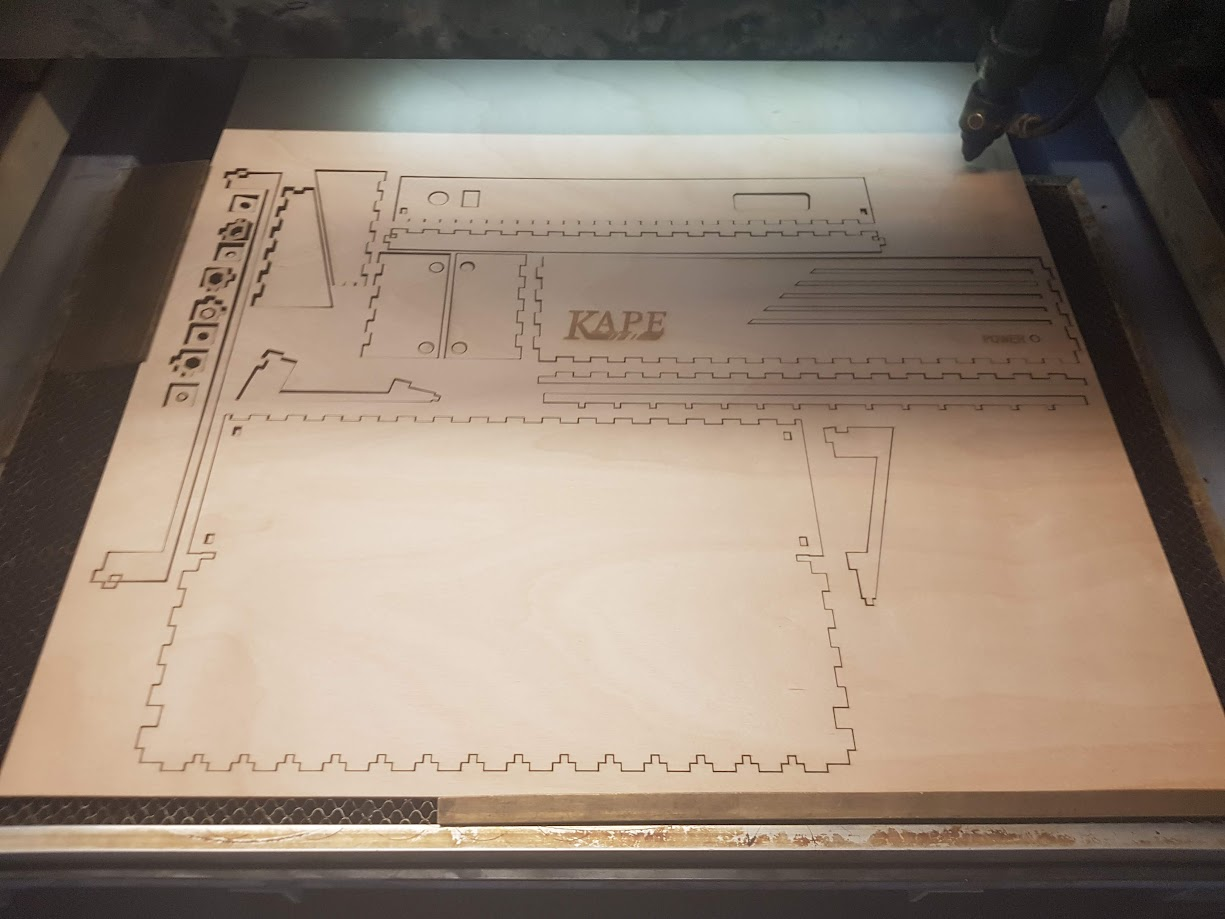

Currently I'm moving on from the GPU to the CPU side, and I've already set up some preliminary hardwired 6502 testing. Next up: PCB design for the GPU with KiCad, and a 6502 CPU setup with 64K memory and a 6551 serial interface for communicating between the PC and the CPU!